Web scraping is a powerful technique for extracting large volumes of data from websites, but it often runs up against obstacles like IP blocks, rate limits, and geo-restrictions. Without proxies, even the best-designed scraping logic will eventually hit a wall: websites detect too many requests from the same IP and deny access. Proxy scrapers and proxy services are therefore essential tools. They provide pools of IP addresses and rotation capabilities that let scrapers distribute requests across many identities, greatly reducing the chance of being blocked. In the sections below we explain what proxy scrapers are, why proxies matter for scraping, and what to look for in a proxy service, and we highlight some leading providers.

What Is a Proxy Scraper?

A proxy scraper is a tool or program that automatically collects proxy server addresses from various online sources. In practice, it crawls proxy listing sites, forums, and even code repositories, parses the gathered data, filters proxies by attributes like location or response time, and outputs a usable list. Proxy scrapers thus let users obtain many free proxy IPs at once. The proxies found this way come with no guarantee of quality, so many scrapers also test each proxy for availability and latency and discard any that are dead or too slow.

In essence, a proxy scraper extracts proxy IP addresses from open sources on the web. However, the proxies obtained this way are generally less reliable than managed ones and can be risky to use.
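The parse-and-filter steps described above can be sketched in a few lines of Python. This is only an illustration: the names `Proxy`, `extract_proxies`, and `filter_by_latency` are hypothetical, the input is assumed to be raw text already downloaded from a listing page, and the latency values are assumed to have been measured separately (for example by timing a test request through each proxy).

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches IPv4 "host:port" candidates such as 203.0.113.7:8080 anywhere in the text.
PROXY_RE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


@dataclass(frozen=True)
class Proxy:
    host: str
    port: int


def extract_proxies(text: str) -> list[Proxy]:
    """Return unique, syntactically valid proxies in first-seen order."""
    seen = set()
    result = []
    for host, port in PROXY_RE.findall(text):
        octets_ok = all(0 <= int(part) <= 255 for part in host.split("."))
        port_ok = 1 <= int(port) <= 65535
        proxy = Proxy(host, int(port))
        if octets_ok and port_ok and proxy not in seen:
            seen.add(proxy)
            result.append(proxy)
    return result


def filter_by_latency(latencies: dict[Proxy, Optional[float]],
                      max_seconds: float) -> list[Proxy]:
    """Keep proxies that answered within max_seconds, fastest first.

    A latency of None means the proxy did not respond at all.
    """
    alive = [(lat, p) for p, lat in latencies.items()
             if lat is not None and lat <= max_seconds]
    return [p for lat, p in sorted(alive, key=lambda pair: pair[0])]
```

Separating extraction from filtering keeps the sketch easy to test without network access; a real scraper would add the download and the latency measurement around these two functions.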

A high-quality proxy service, by contrast, maintains its own proxy pool (often with residential or datacenter IPs) and provides them via an API or dashboard. These services usually manage rotating proxies for you and support automation – features that dedicated scrapers lack. In short, a proxy scraper is a means to find proxies (often free ones), while a proxy service is a provider you subscribe to for reliable, managed proxies.

Why Use Proxies for Web Scraping?

Using proxies is crucial for scalable, stealthy scraping. Three main benefits are:

Avoid IP Blocking and Rate Limits

By routing your requests through a diverse pool of IP addresses, you prevent any one IP from making too many requests. Most websites monitor request volumes per IP and will block or throttle an IP making too many hits. With proxies, you can distribute requests across hundreds or thousands of IPs.

For example, rotating proxies allow you to mimic natural, distributed traffic patterns by ensuring no single address is making an overwhelming number of requests. This greatly lowers the risk of triggering anti-bot measures or rate limits.
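To make the arithmetic concrete, here is a minimal sketch of round-robin assignment. The function name `assign_round_robin` and the placeholder proxy labels are assumptions for illustration only; the point is simply that the per-IP request count drops in proportion to the pool size.

```python
from collections import Counter
from itertools import cycle


def assign_round_robin(urls, proxies):
    """Pair each URL with the next proxy in the pool, wrapping around."""
    if not proxies:
        raise ValueError("proxy pool is empty")
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


# 1,000 requests spread over 100 proxies: each IP is used the same number of times.
urls = [f"https://example.com/page/{i}" for i in range(1000)]
proxies = [f"proxy-{i}" for i in range(100)]  # placeholder labels, not real endpoints
per_proxy = Counter(proxy for _, proxy in assign_round_robin(urls, proxies))
busiest = max(per_proxy.values())
```

With these numbers no single address carries more than 10 requests, compared with 1,000 if everything came from one IP.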

Proxies also let you bypass geo-restrictions: you can choose IPs from specific countries or cities so your requests appear to come from those locations. This is particularly useful for accessing region-locked content (like country-specific search results or shopping sites). In practice, proxies help your scraping traffic look more human, more distributed, and more natural to the target websites.

Maintain Anonymity and Security

Every time you use a proxy, the target website sees the proxy’s IP address instead of your own. This conceals your true IP and network identity, making it harder to trace scraping activity back to you or your servers.

Proxies let you interact with websites more discreetly by concealing your true identity online. If a proxy IP is blocked or blacklisted, your own connection is not affected; you can simply rotate to another proxy. In short, proxies act as a protective layer for your scraper. Even if your scraping is detected, it’s the proxy’s IP that gets flagged, not your home IP.

Access Geo-Restricted Content

Many websites serve different data based on your geographic location, or outright block requests from certain countries. By using a proxy from a different region, you can appear to be located there and retrieve the local version of the site. For instance, a site that shows French prices only to visitors in France becomes accessible when you route through a French proxy IP.

In summary, proxies enable large-scale scraping by preventing any single IP from being overwhelmed or blocked, ensure your traffic remains more anonymous, and let you circumvent geo-based restrictions.

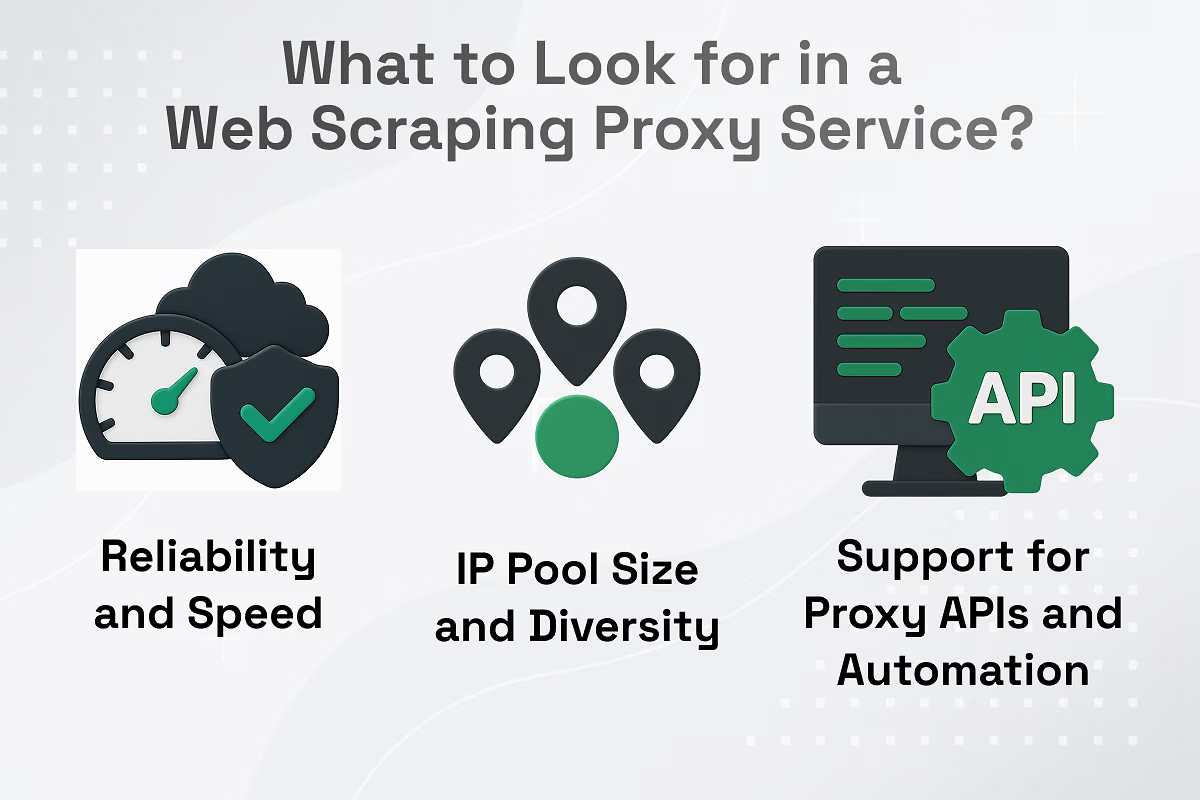

What to Look for in a Web Scraping Proxy Service

When evaluating a proxy provider for scraping, consider these factors:

Reliability and Speed

A good proxy service should have high uptime, fast response times, and robust infrastructure. Look for providers that guarantee 24/7 availability and use data centers or residential networks with high bandwidth. In practical terms, you want proxies that rarely go offline and can handle high request rates without timing out.

IP Pool Size and Diversity

The larger and more varied the proxy pool, the better. A big IP pool (millions of addresses) gives you more anonymity and reduces the chance that two of your scraping sessions accidentally use the same IP. It also enables smooth rotation policies. Providers should offer proxies from many countries and autonomous systems. Diversity is also key: you’ll want a mix of residential, datacenter, and possibly mobile IPs. This ensures you have the right tool for the job – residential IPs are harder to detect on sensitive sites, while datacenter proxies might offer higher speed for routine tasks. A broad network lets you target specific locales or ISPs as needed (for example, for testing localized content or avoiding blocks on certain platforms).

Support for Proxy APIs and Automation

For seamless scraping, it’s very helpful if the proxy service offers an API or developer tools. A good proxy API lets you programmatically request fresh proxies, rotate them, and handle sessions without manual intervention. Floxy, for instance, offers a developer-friendly dashboard & API that works with automated scraping and SEO tasks.

Bright Data likewise provides a comprehensive API for launching scraping jobs and rotating proxies. Having API access means you can integrate proxies directly into your code or scraping framework: for example, your scraper can automatically fetch a new proxy endpoint from the API whenever it needs to rotate. Also check that the provider’s documentation and support are strong, since good docs and quick support will save headaches when configuring proxies in your tools.

Best Proxy Service for Web Scraping

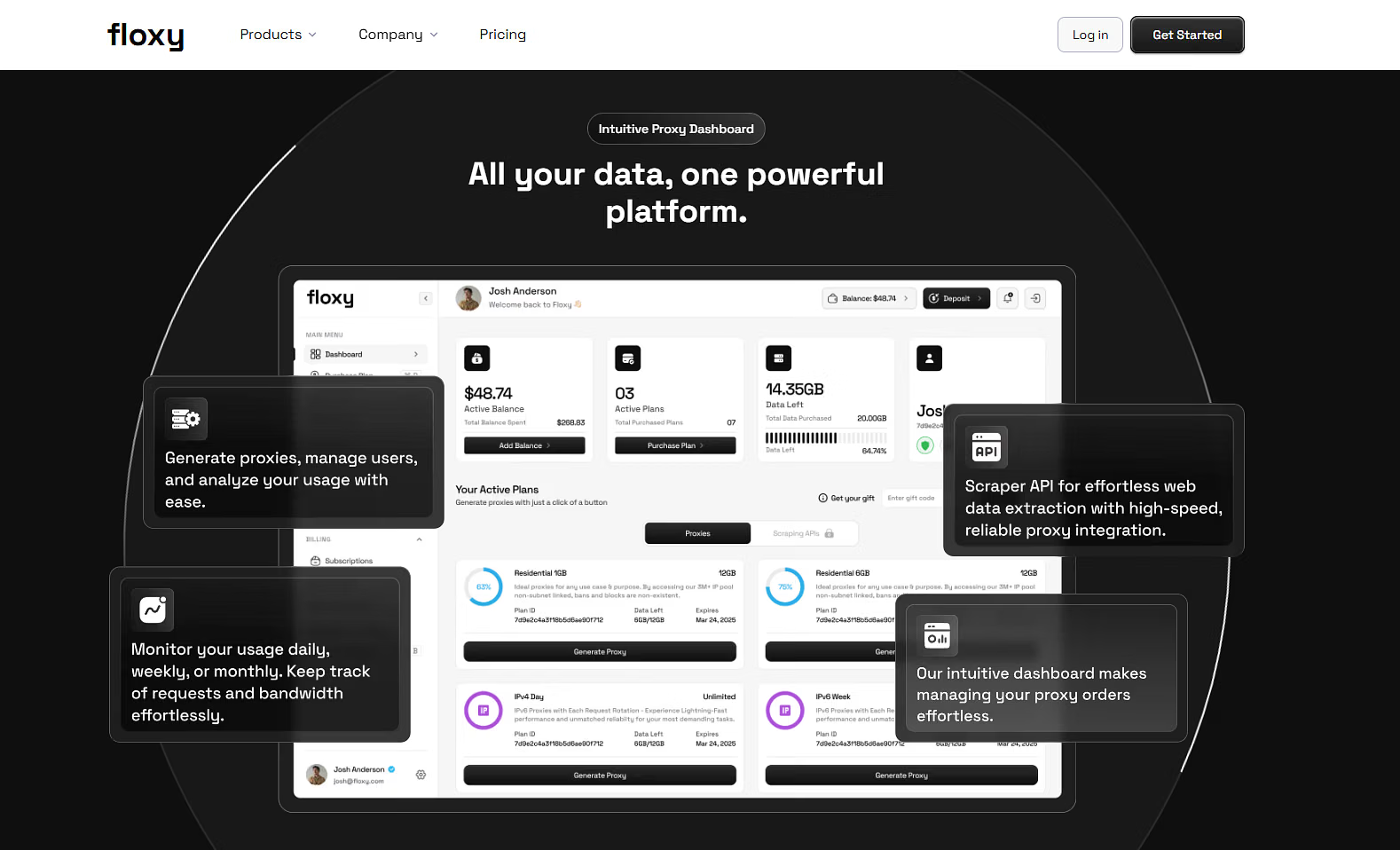

Floxy is a newer proxy service that specializes in scraping, SEO, and automation use cases. We offer both residential and datacenter rotating proxies, along with mobile and ISP proxies, and we emphasize easy automation. Our key features include automatic IP rotation with geo-targeting, a developer-friendly dashboard, and a public API. In practice, Floxy users report that the proxies are fast, stable, and reliable.

Built for ease of use, Floxy’s service provides a dashboard and an API supporting over 93 programming languages, letting developers quickly configure proxy rotation, session length, and location (city or country) per request. Floxy is a great choice for both small projects and scalable data collection. We offer a gateway to scalable, reliable proxies and focus on simplifying the hardest part of web data collection: reliable access. Overall, Floxy stands out for a combination of performance and user-friendliness.

How to Set Up and Use a Proxy Scraper

Using proxies effectively requires proper setup. Here are the key steps:

Choose the Right Proxy Service

Based on your needs (budget, scale, target sites), select a proxy provider (see the options above). Consider starting with a short-term plan or trial to test performance. Verify the service supports the proxy types you need (residential vs datacenter, desired geolocations, etc.) and has an API or easy integration.

Obtain Credentials and Proxy Endpoints

Sign up with the provider and obtain your API key or authentication tokens. Note the proxy endpoints, or the hostnames and ports, that the service provides. Many providers offer a single gateway URL or IP address that dynamically rotates your session. For example, Floxy lets you use URLs like http://username:password@proxy_type.floxy.io:5959/ that automatically route through the proxy pool, where “proxy_type” can be ISP, residential, etc. Bright Data and other providers expose similar gateway endpoints.
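As a rough sketch, a gateway URL in the format shown above can be assembled and plugged into Python’s standard library like this. The helper names `build_proxy_url` and `make_opener` are hypothetical, and the host pattern and port are taken from the example URL; check your provider’s dashboard for the actual values on your account.

```python
from urllib.parse import quote
from urllib.request import ProxyHandler, build_opener


def build_proxy_url(username, password, proxy_type,
                    domain="floxy.io", port=5959):
    """Assemble http://user:pass@proxy_type.domain:port/ from its parts.

    Credentials are percent-encoded so characters such as '@' or ':'
    in a password cannot break the URL structure.
    """
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"http://{user}:{pwd}@{proxy_type}.{domain}:{port}/"


def make_opener(proxy_url):
    """Return a urllib opener that sends HTTP and HTTPS traffic via the proxy."""
    handler = ProxyHandler({"http": proxy_url, "https": proxy_url})
    return build_opener(handler)


proxy_url = build_proxy_url("username", "p@ss:word", "residential")
opener = make_opener(proxy_url)
# opener.open("https://example.com/", timeout=10) would now go through the gateway.
```

The same URL string is what most scraping tools expect in their own proxy settings, so building it in one place keeps credentials handling consistent.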

Integrate Proxies into Your Scraper

- Most tools (Scrapy, Selenium, etc.) accept proxy settings in a similar way. The important part is supplying the proxy’s host:port and your credentials, usually as a single URL of the form http://username:password@host:port. If the provider offers an API endpoint that returns proxy details, call that first in your code and parse the result into that form.

- Enable Rotation and Geo-Targeting. If your provider supports it, configure automatic rotation. For example, Floxy’s API allows you to specify how often to rotate IPs and whether to pin them to a particular country. If you rotate manually, make sure your scraper requests a fresh proxy periodically (e.g. after every N requests or upon detecting a block). When geo-specific data is needed, set the location parameters provided by your proxy service (e.g. target a US city or UK IP). Many services supply detailed docs on how to format these API calls.

- Test and Monitor. Once set up, run some tests. Ensure your requests succeed and responses are coming from the proxy’s IP (not your own). It’s wise to monitor proxy performance in real time: check response times, success rates, and error logs. Good providers offer analytics or logs in their dashboard. You may also write your own periodic checks (e.g. ping a known test URL through the proxy) to detect any issues early.
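For the manual case, the "rotate after every N requests or upon detecting a block" rule might look like the sketch below. Everything here is illustrative: `RotatingFetcher` is a hypothetical class, `send` stands in for whatever HTTP client you use (it takes a URL and a proxy and returns a status code), and treating 403 and 429 as block signals is an assumption that will not hold for every site.

```python
from itertools import cycle

BLOCK_STATUSES = {403, 429}  # assumed block signals; adjust per target site


class RotatingFetcher:
    """Switch proxy after `rotate_every` requests or as soon as a block is seen."""

    def __init__(self, proxies, send, rotate_every=5):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._pool = cycle(proxies)
        self._send = send  # callable: (url, proxy) -> HTTP status code
        self._rotate_every = rotate_every
        self._used = 0
        self.current = next(self._pool)

    def rotate(self):
        """Move to the next proxy in the pool and reset the usage counter."""
        self.current = next(self._pool)
        self._used = 0

    def fetch(self, url, max_attempts=3):
        """Return the first non-block status, or the last status seen."""
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        status = None
        for _ in range(max_attempts):
            if self._used >= self._rotate_every:
                self.rotate()
            status = self._send(url, self.current)
            self._used += 1
            if status not in BLOCK_STATUSES:
                return status
            self.rotate()  # blocked: retry through a different IP
        return status
```

Passing the HTTP call in as `send` keeps the rotation policy independent of any particular client library, and makes it easy to test with a fake function before pointing it at a real site.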

By following these steps, you can seamlessly integrate proxy scraping into your workflow. The key is to let the provider’s API handle the heavy lifting of rotation and pool management, while your scraper code focuses on the target site’s data.

Tips for Successful Web Scraping with Proxies

To get the most out of your proxies, follow these best practices:

- Rotate IPs Regularly. Don’t use a single proxy IP for too many requests. Rotate through your pool frequently to mimic natural traffic. Automatically switching IPs helps avoid IP blocking and maintain anonymity. For instance, you might change the IP every few requests or on a set time interval. Many proxy APIs can do this for you once you configure the rotation rate.

- Use a Mix of Proxy Types. Combine different proxy sources for robustness. Use residential proxies for the most sensitive targets (they appear as normal home users), and datacenter proxies for high-speed tasks. Including mobile proxies can help bypass very strict filters. A mix of proxy types reduces detection risks and ensures access to geo-restricted content. For example, you might primarily use rotating residential IPs but fall back on static datacenter IPs for less-guarded sites.

- Monitor Proxy Performance. Continuously track how your proxies are doing. Check metrics like success rate (fraction of requests that go through), response time, and error rates. Many services provide dashboards or APIs for this. Monitoring lets you detect dead or slow proxies early.

- Respect Usage Patterns. Even with proxies, ramp up request volume gradually. Insert random delays between requests, and vary request headers (like user-agent) to look less bot-like. Don’t bombard the target site with hundreds of quick back-to-back requests from the same proxy. Rotate not just IPs but also behaviors.

- Use Residential Proxies for Tough Targets. For sites with strong anti-scraping defenses (social media, ad networks, ticketing, etc.), use real residential or mobile IPs. These look like normal users to the site’s anti-bot systems, making bans much less likely. Even though they cost more, residential proxies pay off when you must get data from a hard target. In practice, using a high-quality residential proxy can be the difference between success and getting immediately blocked.
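Two of these tips, pacing requests and monitoring proxy performance, lend themselves to a short sketch. The helper names (`polite_pause`, `random_headers`, `ProxyStats`), the delay range, and the user-agent strings are all illustrative assumptions rather than recommended values.

```python
import random
import time
from dataclasses import dataclass

# Example user-agent strings for illustration; maintain your own current list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def polite_pause(min_s=1.0, max_s=3.0, sleep=time.sleep):
    """Sleep for a random duration in [min_s, max_s] and return it."""
    delay = random.uniform(min_s, max_s)
    sleep(delay)
    return delay


def random_headers():
    """Pick a user-agent at random so consecutive requests differ."""
    return {"User-Agent": random.choice(USER_AGENTS)}


@dataclass
class ProxyStats:
    """Running success rate and average response time for one proxy."""
    successes: int = 0
    failures: int = 0
    total_seconds: float = 0.0

    def record(self, ok, seconds):
        if ok:
            self.successes += 1
        else:
            self.failures += 1
        self.total_seconds += seconds

    @property
    def success_rate(self):
        total = self.successes + self.failures
        return self.successes / total if total else 0.0

    @property
    def avg_seconds(self):
        total = self.successes + self.failures
        return self.total_seconds / total if total else 0.0
```

A scraper could call `polite_pause()` before each request, send `random_headers()` with it, and retire any proxy whose `success_rate` falls below a threshold you choose.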

Conclusion

Proxies are a cornerstone of effective web scraping. They keep your scraping operation running by spreading requests across many IPs, hiding your identity, and letting you reach region-locked data. The key is choosing a reputable proxy provider with the features you need: look for high uptime, a large and diverse IP pool, and solid API support.

Among the top options, Floxy stands out for its developer-friendly approach: it delivers rotating residential and datacenter proxies with built-in geotargeting and a robust API. Other premium providers like Bright Data, Oxylabs, and Smartproxy also offer powerful solutions for different budgets and scales. By using well-known proxy networks (rather than unverified free proxies), you ensure your scraping is reliable and sustainable. With the right proxy setup, whether via Floxy or another top service, you can scrape the web efficiently and with confidence.